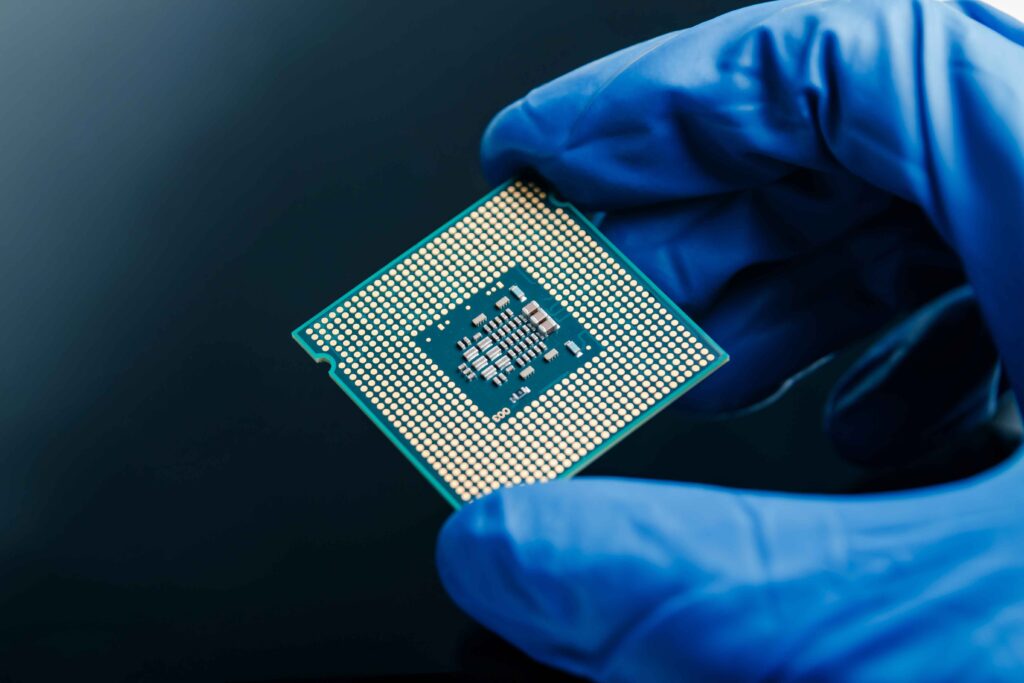

The microchip, also known as an integrated circuit, is a small electronic component that plays a vital role in modern technology. One kind of microchip, the microprocessor, acts as the brain of computers and many other devices, enabling them to process information and perform tasks. The creation of the microchip revolutionised technology and has transformed how we live and work.

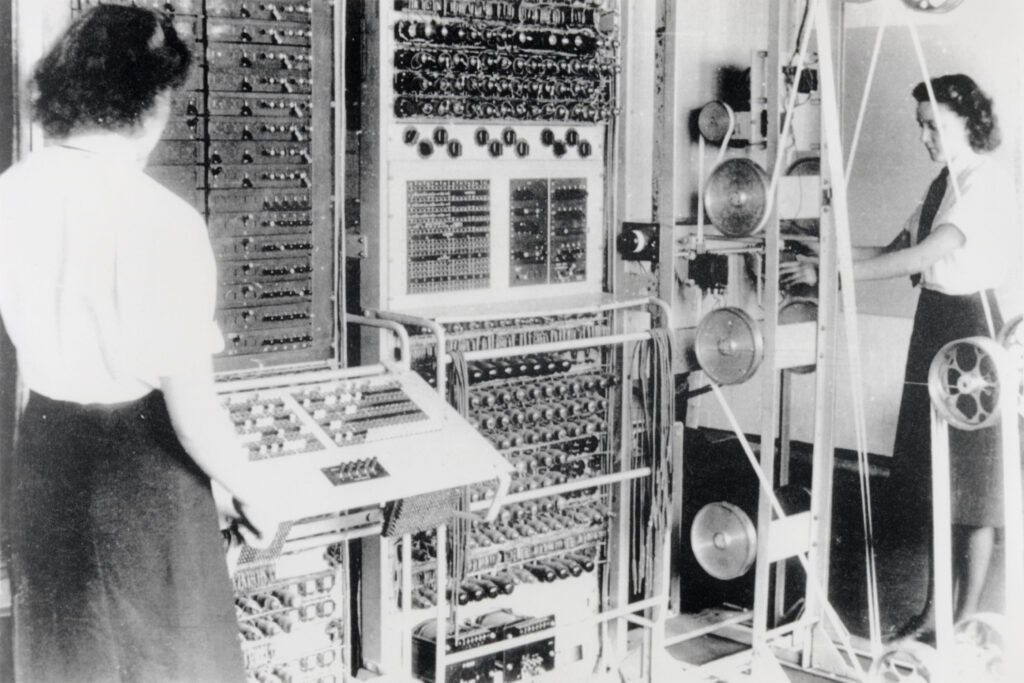

The concept of the microchip began in the 1950s. At the time, computers were large machines that used numerous vacuum tubes and transistors. These early computers were bulky, expensive, and not very efficient. The idea of integrating many transistors onto a single piece of silicon emerged as a solution to these problems.

In 1958, American engineer Jack Kilby created the first integrated circuit while working at Texas Instruments. This breakthrough allowed multiple electronic components to be combined into one small chip. Around the same time, Robert Noyce, who later co-founded Intel, independently developed a similar concept.

In 1968, Noyce co-founded Intel, which would become one of the leading companies in microchip production. The work of Kilby and Noyce laid the foundation for the modern microchip, and their innovations were crucial to the computer revolution that followed.

The United States was a major pioneer in microchip technology, but other countries also played significant roles. Japan became a key player in the 1970s and 1980s, with companies like NEC and Toshiba developing advanced semiconductor technologies.

In recent decades, South Korea and Taiwan have emerged as leaders in microchip production, with companies such as Samsung and TSMC becoming significant players in the global market.

Today, microchips are produced in enormous quantities. Demand is driven by their use in a wide range of devices, including smartphones, computers, cars, and household appliances. Estimates suggest that over a trillion microchips are manufactured annually, highlighting their importance in our daily lives.

How does a microchip work?

QUESTION:

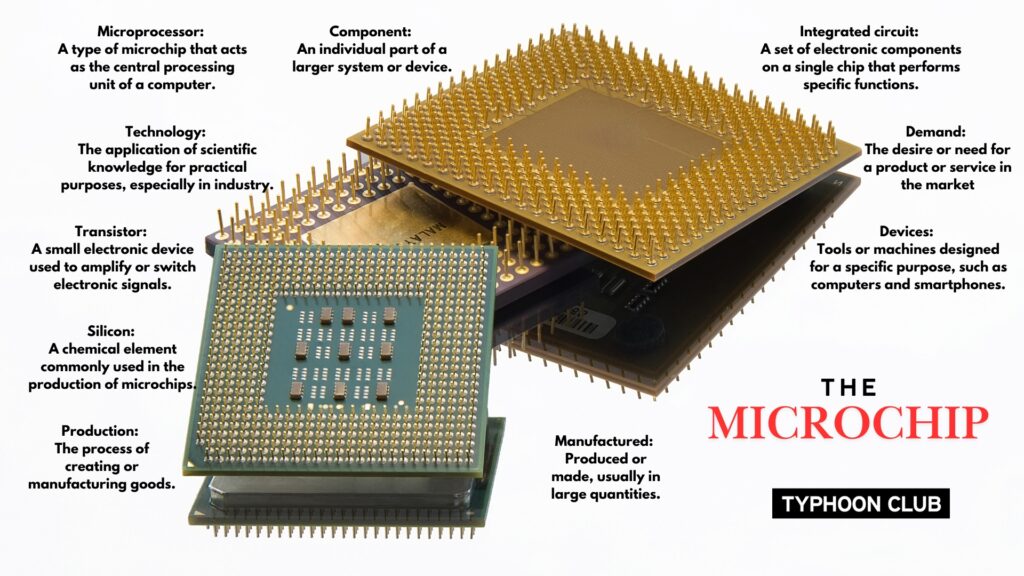

VOCABULARY: